“Students will learn by inhabiting an alternate history where Alan Turing and Richard Feynman meet during World War II and must invent quantum computers to defeat Nazi Germany. As a final project, they will get to program a D-Wave One machine and interpret its results.”

If you are based in Seattle, you'll want to keep an eye out for the next time Paul Pham teaches his Quantum Computing for Beginners course, which follows the exciting narrative outlined above.

For everybody else, there is EdX's CS191x Quantum Mechanics and Quantum Computation course. I very much hope this course will be a regular offering. Although it lacks the unique dramatic arc of P. Pham's storyline, this course is nevertheless thoroughly enjoyable.

When I signed up for this course, I didn’t know what to expect. Mostly, I decided to check it out because I was curious to see how the subject would be taught, and because I wanted to experience how well a web-based platform could support academic teaching.

This course fell during an extremely busy time, not only because of a large professional workload, but also because the deteriorating health of my father required me to fly twice from Toronto to my parents in Germany. Despite this, the time required for this course proved to be entirely manageable. If you have an advanced degree in math, physics or engineering and want to learn about Quantum Computing, you shouldn't shy away from taking this course as long as you have an hour to spare each week. It helps that you can speed up the video lectures to 1.5 times normal speed (although this made Prof. Umesh Vazirani sound a bit like he had inhaled helium).

Prof. Vazirani is a very competent presenter, and you can tell that a lot of thought went into how to approach the subject, i.e. how to ease those who are new to it into the strangeness of Quantum Mechanics. I was suspicious of the claim made at the outset that the required mathematics would be introduced and developed as needed during the course, but it seems to me that this was executed quite well. (Since I was already familiar with the required math, I can't really say whether it will work for somebody completely new to it, but it seems to me that the only prerequisite was indeed a familiarity with linear algebra.)

It is interesting to see discussions posted by individuals who took the course and were apparently subjected to QM for the first time. One such thread started this way:

“I got 100. It was really a fun. Did I understand anything? I would say I understood nothing.”

To me this illustrates that you simply cannot avoid discussing the interpretation of quantum mechanics. Obviously this subject is still very contentious, and Prof. Vazirani touched on it when discussing the Bell inequalities in a very concise and easy-to-understand manner. Yet, judging from the confusion of these 'straight A' students, I think there needs to be more of it. It is not enough to assert that Einstein probably would have reconsidered his stance if he had known about these results. Yes, he would have given up on a conventional local hidden variable approach, but I am quite certain his preference would then have shifted to finding a topological non-local field theory.
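For readers who want to see what the Bell argument actually rules out, here is a minimal Python sketch using the CHSH form of the inequality. This is my own illustration, not material from the course; the function names and the particular measurement angles are choices made for this example. It first enumerates every deterministic local hidden variable strategy (each party pre-assigns a ±1 outcome to each of its two settings) and finds that the CHSH combination S never exceeds 2 in magnitude. It then computes S for the two-qubit singlet state, where quantum mechanics reaches 2√2.

```python
import itertools
import math


def classical_chsh_max():
    """Largest |S| over all deterministic local hidden variable strategies.

    Each party pre-assigns an outcome (+1 or -1) to each of its two settings.
    Since S is linear in probabilities, mixtures of such strategies cannot
    beat the best deterministic one.
    """
    best = 0
    for a0, a1, b0, b1 in itertools.product((1, -1), repeat=4):
        s = a0 * b0 + a0 * b1 + a1 * b0 - a1 * b1
        best = max(best, abs(s))
    return best


def observable(theta):
    """Spin measurement along angle theta in the x-z plane: cos(t) Z + sin(t) X."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]


def kron(a, b):
    """Kronecker product of two 2x2 matrices, giving a 4x4 matrix."""
    return [[a[i][j] * b[k][l] for j in range(2) for l in range(2)]
            for i in range(2) for k in range(2)]


# Singlet state (|01> - |10>)/sqrt(2) in the basis |00>, |01>, |10>, |11>.
SINGLET = [0.0, 1 / math.sqrt(2), -1 / math.sqrt(2), 0.0]


def correlation(theta_a, theta_b, psi=SINGLET):
    """Expectation value <psi| A(theta_a) (x) B(theta_b) |psi> for a real state."""
    m = kron(observable(theta_a), observable(theta_b))
    return sum(psi[r] * m[r][c] * psi[c] for r in range(4) for c in range(4))


def quantum_chsh(a0=0.0, a1=math.pi / 2, b0=math.pi / 4, b1=-math.pi / 4):
    """CHSH combination S for the singlet with the given measurement angles."""
    return (correlation(a0, b0) + correlation(a0, b1)
            + correlation(a1, b0) - correlation(a1, b1))


if __name__ == "__main__":
    print("classical bound:", classical_chsh_max())
    print("quantum |S|:", abs(quantum_chsh()), "vs 2*sqrt(2) =", 2 * math.sqrt(2))
```

The gap between the two numbers is the whole point: no assignment of pre-existing local values can reproduce the quantum correlations, which is why a conventional local hidden variable theory is off the table.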

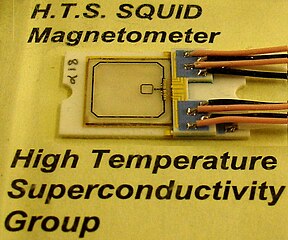

Of course, there is only so much that can be covered given the course's duration. Other topics were missing entirely: Quantum Error Correction, Topological and Adiabatic Quantum Computing, and especially Quantum Annealing. The latter was probably the most glaring omission, since it is the only technology in this space that is already commercially available.

Generally, I found that everything that was covered was covered very well. For instance, if you ever wondered how exactly Grover's and Shor's algorithms work, you will know after taking the course. I especially found the homework assignments to be wonderful brain teasers that helped me take my mind off more worrisome issues at hand. I think I will miss them. They consisted of well-thought-out exercises, and as with any science course, it is really the exercises that help you understand and learn the material.
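To give a flavour of what "how exactly Grover's algorithm works" boils down to, here is a minimal classical state-vector simulation in Python. This is a sketch I wrote for illustration, not code from the course; the function name `grover_search` and its parameters are my own, and it assumes a single marked item. It starts from the uniform superposition, then repeats the two Grover steps (an oracle that flips the sign of the marked amplitude, and a diffusion step that reflects every amplitude about the mean) roughly (π/4)√N times.

```python
import math


def grover_search(n_qubits, marked):
    """Simulate Grover's search over 2**n_qubits items with one marked index.

    Hypothetical helper for illustration. Returns the measurement
    probabilities after floor(pi/4 * sqrt(N)) Grover iterations.
    """
    n = 2 ** n_qubits
    if not 0 <= marked < n:
        raise ValueError("marked index out of range")
    # Uniform superposition, as produced by Hadamards on |0...0>.
    amps = [1 / math.sqrt(n)] * n
    iterations = math.floor(math.pi / 4 * math.sqrt(n))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion operator 2|s><s| - I: reflect every amplitude about the mean.
        mean = sum(amps) / n
        amps = [2 * mean - a for a in amps]
    return [a * a for a in amps]


if __name__ == "__main__":
    probs = grover_search(3, marked=5)
    print("P(marked) =", round(probs[5], 4))
```

For 3 qubits (N = 8) two iterations suffice, and the marked item is measured with probability 121/128, about 0.945, compared with 1/8 for a single random guess. The quadratic speed-up comes from needing only about √N oracle calls rather than about N.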

On the other hand, the assignments and exams also highlighted the strengths and weaknesses of the technology underlying the courseware. Generally, entering formulas worked fine, but sometimes the solver acted up and it wasn't always entirely clear why (e.g. how many digits were required for a numerical answer, or why certain algebraically equivalent terms were not recognized). While this presented the occasional obstacle, on the upside you get the immediate gratification of instant feedback and very nice progress tracking that allows you to see exactly how you are doing. The following is a screenshot of my final tally. The final exam fell during a week in which I was especially hard pressed for time, so I slacked off and just guesstimated the last couple of answers (with mixed results). Compared to a conventional class, knowing exactly when you have already achieved a passing score via the tracking graph makes this a risk- and stress-free strategy.

A common criticism of online learning, compared to the established ways of doing things, is the missing classroom experience and interaction with the professor and teaching staff. To counter this, discussion boards were linked to all assignments, and discussion of the taught material was encouraged. Unfortunately, since my time was at a premium I couldn't participate as much as I would have liked, but I was pleasantly surprised by how responsive the teaching assistants were to the questions put to them (even over the weekends).

This is all the more impressive given the number of students enrolled in this course:

The geographic reach was no less impressive:

Having been sceptical going into this, I've since become a convert. Just as Khan Academy is revolutionizing K-12 education, EdX and similar platforms like Coursera represent the future of academic teaching.

For anybody needing an immediate dose of D-Wave news, Wired has this long, well-researched article (Robert R. Tucci summarized it in visual form on his blog). It strikes a pretty objective tone, yet I find the uncritical acceptance of Scott Aaronson's definition of quantum productivity a bit odd. As a theorist, Scott is only interested in quantum speed-up. That kind of tunnel vision is not inappropriate for his line of work, just an occupational hazard that comes with the job, but it doesn't make for a complete picture.

In it, he makes a very compelling case that we are due for another paradigm change. To me, the latter means dusting off some of Schrödinger's original wave mechanics ideas. If we were to describe a simple quantum algorithm using that picture, there would be a much better chance of giving non-physicists an idea of how these computation schemes work.